LoRA and DoRA Implementation from Scratch

I built a retrieval pipeline with LlamaIndex, Elasticsearch, and Llama3 to test local deployment workflows.

I implemented LoRA and DoRA from scratch in PyTorch to understand the methods end to end.

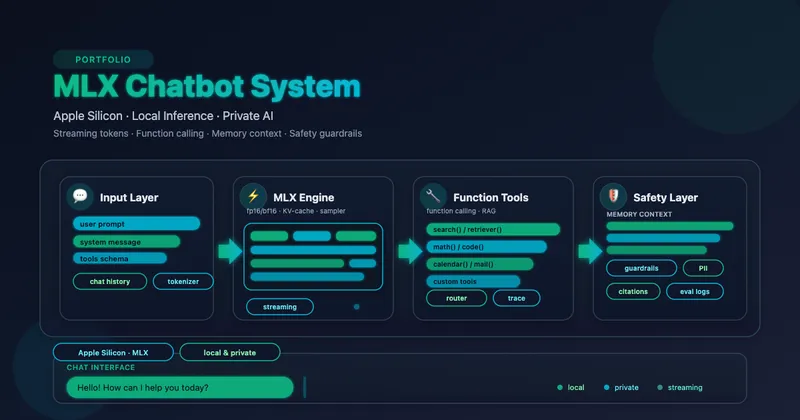
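To make the LoRA and DoRA idea concrete, here is a minimal PyTorch sketch of both layers. It is an illustration under stated assumptions, not necessarily the code from the write-up: the class names `LoRALinear` and `DoRALinear`, the defaults `rank=4` and `alpha=8.0`, and the initialization of `A` are my own choices. LoRA adds a trainable low-rank update `(alpha / rank) * B @ A` to a frozen weight. DoRA additionally splits the merged weight into a learned magnitude vector `m` and a normalized direction. The norm here is taken per column of the `(out_features, in_features)` weight matrix, following the column-wise notation of the DoRA paper; some implementations normalize per output row instead.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class LoRALinear(nn.Module):
    """Wraps a frozen nn.Linear and adds a trainable low-rank update."""

    def __init__(self, linear: nn.Linear, rank: int = 4, alpha: float = 8.0):
        super().__init__()
        self.linear = linear
        # Freeze the pretrained weight and bias; only the adapter is trained.
        for p in self.linear.parameters():
            p.requires_grad_(False)
        self.scale = alpha / rank
        # A gets a small random init, B starts at zero, so the update B @ A
        # is zero at the start and the wrapped layer behaves like the original.
        self.A = nn.Parameter(torch.randn(rank, linear.in_features) / rank**0.5)
        self.B = nn.Parameter(torch.zeros(linear.out_features, rank))

    def delta_weight(self) -> torch.Tensor:
        # Low-rank weight update with shape (out_features, in_features).
        return self.scale * (self.B @ self.A)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.linear(x) + F.linear(x, self.delta_weight())


class DoRALinear(LoRALinear):
    """LoRA update plus a learned per-column magnitude (assumed column-wise norm)."""

    def __init__(self, linear: nn.Linear, rank: int = 4, alpha: float = 8.0):
        super().__init__(linear, rank, alpha)
        # Magnitude vector initialised to the column norms of the frozen weight.
        self.m = nn.Parameter(linear.weight.detach().norm(p=2, dim=0, keepdim=True))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        merged = self.linear.weight + self.delta_weight()
        # Unit-norm columns give the direction; m rescales each column.
        direction = merged / merged.norm(p=2, dim=0, keepdim=True)
        return F.linear(x, self.m * direction, self.linear.bias)


if __name__ == "__main__":
    torch.manual_seed(0)
    layer = DoRALinear(nn.Linear(16, 8), rank=4, alpha=8.0)
    trainable = [n for n, p in layer.named_parameters() if p.requires_grad]
    print("trainable parameters:", trainable)
    print("output shape:", tuple(layer(torch.randn(2, 16)).shape))
```

Because `B` starts at zero, both wrappers reproduce the frozen layer's output before any training step, which is a handy sanity check when swapping them into an existing model. Building the full `B @ A` matrix in `forward` keeps the sketch short; computing `(x @ A.T) @ B.T` avoids materializing it in the LoRA case.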

I explored chat tooling on Apple Silicon using MLX to understand the runtime and packaging story.